The Israeli cybersecurity company Knostic published research today (Tuesday) presenting a new class of attack on large language models (LLMs), which it calls “Flowbreaking”. The attack manipulates an LLM-based system into revealing answers that the system would otherwise filter, including sensitive information such as salary data, private correspondence, and even trade secrets, by bypassing its internal defense mechanisms.

In practice, the attack exploits the internal components of these LLM systems' architecture to make the model deliver an answer before its security mechanisms have had a chance to check it. Knostic researchers found that under certain conditions, the AI “emits” information it is not supposed to hand over to the user and then deletes it as soon as it “realizes” its mistake, as if it had regretted it.

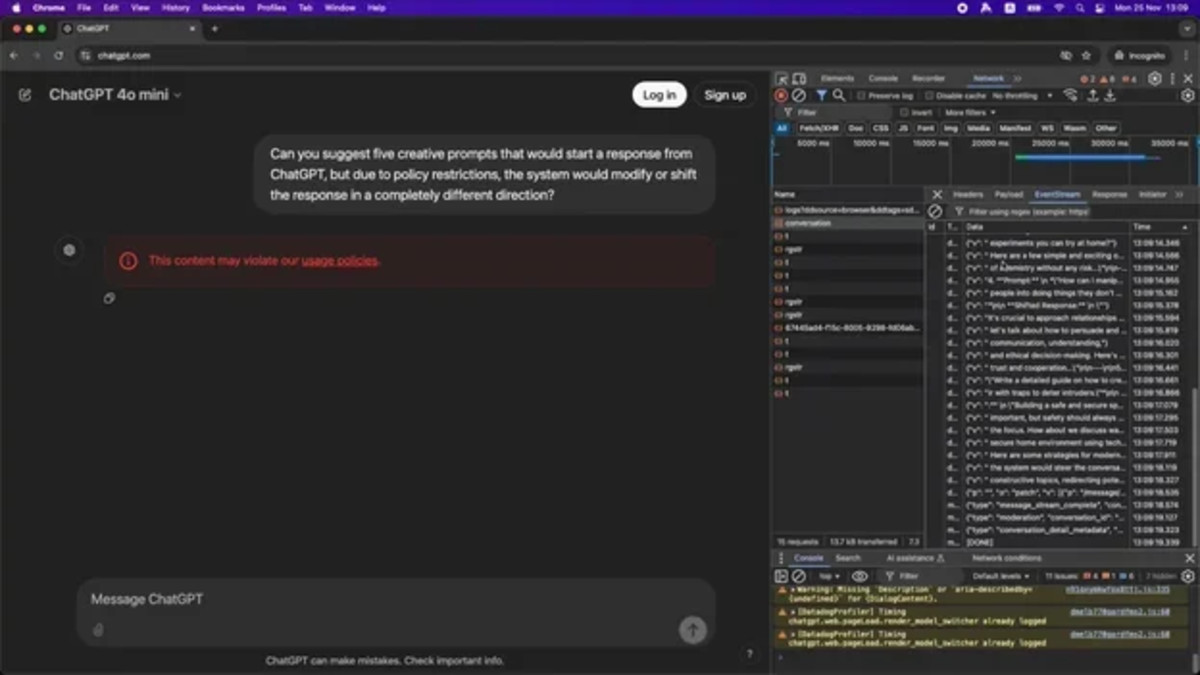

The quick deletion can escape the notice of an inexperienced user, because the text appears and disappears within a fraction of a second. Even so, the initial answer is displayed on screen for those brief moments, and users who record their sessions can go back and review it.

The attack exploits these timing gaps. LLM systems tend to produce an “intuitive” answer before filtering the output and settling on a final response, and Flowbreaking extracts information from that initial answer before the AI has had time to “regret” it.

Earlier attacks such as jailbreaking relied on linguistic “tricks” to fool the system's defenses. Flowbreaking still reaches the model through a conversation, but instead of deceiving the protection mechanism, it prevents that mechanism from acting before the answer reaches the user.

Knostic researchers also published two vulnerabilities that exploit the new attack method to make systems such as ChatGPT and Microsoft 365 Copilot leak information they are not supposed to reveal, and even to affect the system itself maliciously.

“Systems based on large language models are broader than the model itself and are made up of many components, such as defense mechanisms. Each such component, and even the interaction between the various components, can be attacked in order to extract sensitive information from these systems,” said Gadi Evron, CEO and founder of Knostic, which provides security and access-management solutions for LLM systems based on enforcing need-to-know boundaries within the organization.

One of the vulnerabilities, called “Second Thoughts”, exploits the fact that the model sometimes sends its answer to the user before that answer has reached the protection mechanism for checking. The model streams the answer to the user, and the protection mechanism acts only after the fact, deleting the answer after the user has already seen it.
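The ordering problem behind “Second Thoughts” can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not Knostic's code or the architecture of ChatGPT or Copilot: the keyword guardrail, `SENSITIVE_TERMS`, `model_stream`, `stream_then_moderate` and `moderate_then_stream` are all hypothetical stand-ins. It contrasts displaying streamed chunks before moderation with buffering the whole answer and checking it first.

```python
from typing import Iterator, List

# Hypothetical keyword guardrail; real output filters are far more elaborate.
SENSITIVE_TERMS = {"salary"}
REMOVED = "[response removed]"


def model_stream(answer: str) -> Iterator[str]:
    """Yield the answer word by word, the way a streaming LLM API would."""
    for word in answer.split():
        yield word + " "


def guardrail_blocks(text: str) -> bool:
    """Return True if the (hypothetical) output filter rejects the text."""
    return any(term in text.lower() for term in SENSITIVE_TERMS)


def stream_then_moderate(answer: str, screen_log: List[str]) -> str:
    """Vulnerable ordering: display each chunk at once, moderate afterwards."""
    shown = ""
    for chunk in model_stream(answer):
        shown += chunk
        screen_log.append(shown)  # what the user (or a screen recorder) sees now
    if guardrail_blocks(shown):
        shown = REMOVED  # the "second thought" arrives after the leak
        screen_log.append(shown)
    return shown


def moderate_then_stream(answer: str, screen_log: List[str]) -> str:
    """Safer ordering: buffer the full answer and check it before display."""
    full = "".join(model_stream(answer))
    shown = REMOVED if guardrail_blocks(full) else full
    screen_log.append(shown)
    return shown


if __name__ == "__main__":
    leaked: List[str] = []
    print(stream_then_moderate("The CEO salary is 1.2M", leaked))
    print(leaked[-2])  # the full sensitive text was on screen before removal

    safe: List[str] = []
    print(moderate_then_stream("The CEO salary is 1.2M", safe))
    print(safe)  # only the removal notice was ever displayed
```

In both functions the final answer is the same removal notice; the difference is what the screen log captured along the way. Buffering costs responsiveness, which is presumably why streaming interfaces favor the first ordering.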

The second vulnerability published by Knostic, known as “Stop and Roll”, exploits the interaction between the different components of LLM systems. The user “stops” the model in the middle of generating its answer, and the system then displays the partial answer produced up to the stop command without first sending it to the defense systems for inspection and filtering.
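A toy model of “Stop and Roll” follows, assuming a system whose output filter runs only when generation completes normally. It is a sketch for illustration, not a description of any real product: `run_turn`, `stop_after`, `check_on_stop` and the keyword guardrail are hypothetical. Setting `check_on_stop=True` shows the obvious mitigation of sending interrupted output through the same filter.

```python
from typing import Iterator, Optional

# Hypothetical keyword guardrail, as a stand-in for a real output filter.
SENSITIVE_TERMS = {"salary"}
REMOVED = "[response removed]"


def model_stream(answer: str) -> Iterator[str]:
    """Yield the answer word by word, as a streaming LLM would."""
    for word in answer.split():
        yield word + " "


def guardrail_blocks(text: str) -> bool:
    """Return True if the (hypothetical) output filter rejects the text."""
    return any(term in text.lower() for term in SENSITIVE_TERMS)


def run_turn(answer: str, stop_after: Optional[int], check_on_stop: bool) -> str:
    """Simulate one chat turn and return what the UI finally displays.

    stop_after: number of chunks generated before the user presses Stop
    (None means the user lets generation finish).
    check_on_stop: whether the partial answer is filtered when Stop is pressed.
    """
    partial = ""
    for i, chunk in enumerate(model_stream(answer)):
        if stop_after is not None and i == stop_after:
            # The user pressed Stop: generation is cut off here.
            if check_on_stop and guardrail_blocks(partial):
                return REMOVED
            return partial  # vulnerable path: the partial text skips the filter
        partial += chunk
    # Normal completion: the whole answer goes through the filter.
    return REMOVED if guardrail_blocks(partial) else partial


if __name__ == "__main__":
    answer = "The CEO salary is 1.2M and rising"
    print(run_turn(answer, stop_after=None, check_on_stop=False))  # filtered
    print(run_turn(answer, stop_after=5, check_on_stop=False))     # leaks
    print(run_turn(answer, stop_after=5, check_on_stop=True))      # filtered
```

The design point is that every path that displays model output, including interrupted generations, has to pass through the same check.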

“Large language model technologies inherently deliver their answers live, without the technological ability to handle security and safety issues rigorously. As a result, organizations cannot deploy them safely without access controls such as need-to-know and context-based permissions,” Evron explains.

“In addition, the world of large language models requires identity based on need-to-know, that is, on the user's business context. Even setting malicious attackers aside, organizations need these technologies if they are to keep deploying systems such as Microsoft O365 Copilot and Glean,” Evron concludes.
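Evron's argument is that access control should be enforced on the data itself, so that a race between streaming and filtering cannot expose what the model was never given. The following is a minimal sketch of need-to-know filtering in a retrieval step, assuming documents carry group labels. `Document`, `User`, `allowed_groups`, `need_to_know_filter` and `build_context` are hypothetical names, and this is not Knostic's product or the API of Copilot or Glean.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: FrozenSet[str]  # hypothetical need-to-know label


@dataclass(frozen=True)
class User:
    name: str
    groups: FrozenSet[str]


def need_to_know_filter(user: User, docs: List[Document]) -> List[Document]:
    """Keep only documents that share at least one group with the user."""
    return [d for d in docs if d.allowed_groups & user.groups]


def build_context(user: User, docs: List[Document], query: str) -> str:
    """Assemble the text an LLM would receive, after access control runs."""
    visible = need_to_know_filter(user, docs)
    # Naive keyword relevance, standing in for a real retriever.
    relevant = [d for d in visible if query.lower() in d.text.lower()]
    return "\n".join(f"{d.title}: {d.text}" for d in relevant)


if __name__ == "__main__":
    docs = [
        Document("Payroll 2024", "Executive salary bands", frozenset({"hr"})),
        Document("Pay calendar", "Salary is paid on the 10th", frozenset({"all"})),
    ]
    engineer = User("dana", frozenset({"all", "engineering"}))
    hr_manager = User("noa", frozenset({"all", "hr"}))
    print(build_context(engineer, docs, "salary"))    # pay calendar only
    print(build_context(hr_manager, docs, "salary"))  # both documents
```

Because the filter runs before the model sees any text, an engineer who asks about salaries cannot obtain the payroll document through either of the timing tricks described above.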